What is PolyBase?

In this Article, we will discuss new feature called PolyBase with Microsoft SQL Server 2016. Why Microsoft has introduced in the SQL Server and why it is needed.

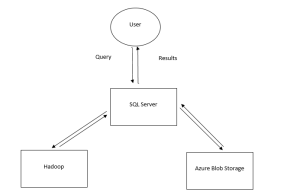

If you see from Data Warehouse scope is progressing and we see that there is an increasing volume of data from a lot of sources. All of these data is landing in some cost effective storage system like Hadoop and also cloud solutions like Azure Blob Storage.

Microsoft SQL Server wants to reach out new data storage like Hadoop and Azure Blob Storage. For this purpose, Microsoft has introduced new technology called PolyBase that allows to query data residing in HDFS or Azure Blob Storage.

PolyBase in SQL Server 2016

User can submit Queries normal T-SQL Statements in SQL Server and access the data residing in Hadoop or in Azure Blob Storage and combine the data residing in the SQL Server Instance. Then get the results back to the end user. Now users can analyze data coming from heterogeneous system by using T-SQL Statements in SQL Server.

PolyBase introduces the below capabilities

1) Ad-Hoc Queries on Hadoop Data.

2) Import / Export data from Hadoop/Azure Storage to SQL Server for persistent storage, queryable storage.

3) Seamless BI Integration like External Tables, File Formats and Data Sources.

Let’s do a quick Demo

Let me show what I was talking about in terms of SQL Server Constructs that we have introduced.

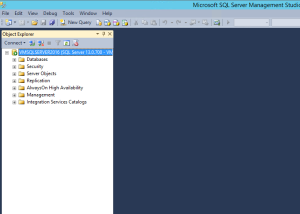

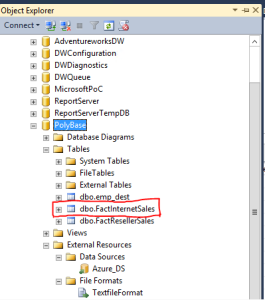

If you see below Diagram, I have connected to SQL Server instance opened which is running under SQL Server 2016 CTP3.0

You can see Databases under your SQL Server Instance. Let’s look PolyBase Database for the purpose of this demo.

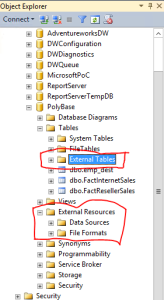

I have created PolyBase Database for this demonstration. If you expand that, you will find new node called External Resources. Once you expand the External Resources, you will find Data Sources and File Formats.

Similarly, if you expand Tables node, you will find new construct External Tables.

These are the two constructs introduced in SQL Server 2016 with respect to PolyBase. I will quickly show you how will these constructs will point to Azure Blob Storage.

Now let’s look at creating External Tables under Tables Folder and Data Sources, File Formats under External Resources.

CREATE MASTER KEY ENCRYPTION BY PASSWORD = ‘p@55w0rd123’ — any password

Creates a database credential. A database credential is not mapped to a sever login or database user. The credential is used by the database to access to the external location anytime the database is performing an operation that requires access.

Create Database Scoped Credential AzureStorageCredential

With Identity = ‘Srikanth’, — any name

Secret =’pzCp341iFP6uJUPkWplpJdcN95KTSJOk67ojncpspWB3vrVy2gTJYkih

191lENSKJ4Kl9BVK1jQrTQY80g1GSA==’;

— primary access Key of azure blob storage

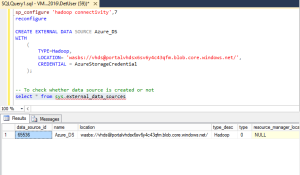

— Option 7: Azure blob storage (WASB[S])

sp_configure ‘Hadoop connectivity’, 7

Reconfigure

Hadoop Connectivity specifies the type of Hadoop data source for all connections from PolyBase to Hadoop clusters or Azure blob storage (WASB). This setting is required in order to create an external data source for an external table

Option 0: Disable Hadoop connectivity

Option 1: Hortonworks HDP 1.3 on Windows Server

Option 1: Azure blob storage (WASB[S])

Option 2: Hortonworks HDP 1.3 on Linux

Option 3: Cloudera CDH 4.3 on Linux

Option 4: Hortonworks HDP 2.0 on Windows Server

Option 4: Azure blob storage (WASB[S])

Option 5: Hortonworks HDP 2.0 on Linux

Option 6: Cloudera 5.1 on Linux

Option 7: Hortonworks 2.1 and 2.2 on Linux

Option 7: Hortonworks 2.2 on Windows Server

Option 7: Azure blob storage (WASB[S])

— creating data source to specify in which container files are stored under storage name

Now tell the SQL Server where Azure Blob Storage that we connect to. That is what you will do with the next statement called External Data Source.

CREATE EXTERNAL DATA SOURCE Azure_DS

WITH

(

TYPE=Hadoop,

LOCATION= ‘wasbs: //ContainerName@StorageAccountName.blob.core.windows.net/’,

CREDENTIAL = AzureStorageCredential

);

— To check whether data source is created or not

select * from sys.external_data_sources

— creating file format to specify how each filed is delimited either by comma(,), tab (\t), pipe (|) etc.

I want to tell SQL Server how the data is stored with structured and un-structured format.

Create EXTERNAL FILE FORMAT [TextfileFormat] WITH (FORMAT_TYPE = DelimitedText,

FORMAT_OPTIONS (FIELD_TERMINATOR = N’,’, USE_TYPE_DEFAULT = True))

GO

— To check whether file format is created or not

select * from sys.external_file_formats

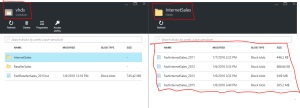

Let’s create an External Table referring to the files stored in the container (vhds).

As the final step, you are creating an external table. Here, we are telling SQL Server what are the columns, datatypes in the table.

It is similar to normal table that we create in SQL Server with additional attributes.

Attributes

- Location will specify the path where files are stored i.e.; path on the Azure Blob Storage.

- Data Source, where is the data residing on.

- File Format, what is the shape of data.

- Other optional parameters like Reject Type, Reject Value, Reject Sample Value.

Here, the structure of the extFactInternetSales has got created but data is not stored in this table.

This external table will point to the folder in the container, when the user issues the Select Statement in the SQL Server Instance. Then it will retrieve data from all the files (FactInternetSales_2011, FactInternetSales_2012, FactInternetSales_2013 and FactInternetSales_2015) from folder (InternetSales) in the container.

In the same Polybase, I have other table with name ‘FactInternetSales’ within the SQL Server Instance.

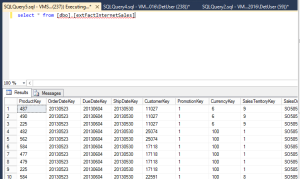

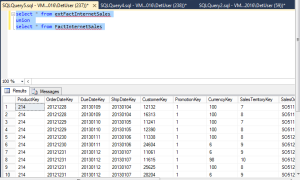

Let’s now query the external table (referring to files storage under Azure Blob Storage in the container) and internal table with in the SQL Server Instance.

select * from extFactInternetSales

union

select * from FactInternetSales

Here, extFactInternetSales table is referring to files stored in Container with data for years 2011,2012,2013 and 2015 (Jan to June)

FactInternetSales is having data for year 2015(July to December).

To show entire data to the user, am joining both tables using union operator as show above.

Thanks for that !

But it doesn’t work for me if I try to create the external datasource with a sql user (no windows authentication). There I get the error “Access to the remote server is denied because no login-mapping exists.”.

But I want to run jobs with sql user and no windows login. Can you help me there ?