Hello,

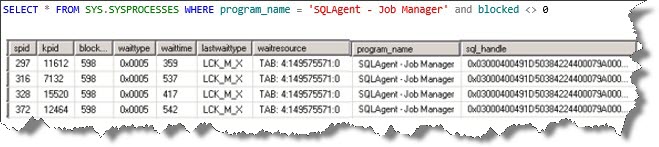

Recently we had an issue with chain blocking and most of the jobs are complete but do not finish. When observed in Job Activity Monitor most of the jobs say “SQL Server Job Performing Completion Actions”. This doesn’t give you any idea about what is the job doing in the backend to finish. When checked in sys.sysprocesses table we will observe that the blocking and the blocker statements are coming from the program “SQLAgent – Job Manager”. Again this doesn’t tell you which job is being blocked and blocking. The interesting things to note will be the sql_handle from the sys.sysprocesses when the blocking is happening.

So let’s start with querying sys.sysprocesses. You will observe the lastwaittype to be LCK_M_X and the waitresource is TAB: 4:xxxxxxxxx:xx. When you query for this object name in MSDB database (DB ID 4 from wait resource) it will return ‘sysjobhistory’.

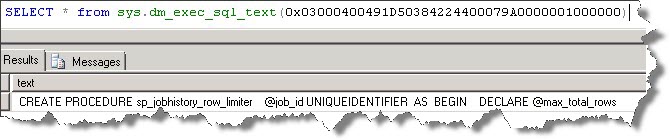

It is evident that the blocking is happening when trying to clear the history of the job once it is completed. When we check the sql_handle and get the statement it is actually running the procedure “sp_jobhistory_row_limiter”.

Interesting? Not yet. When we check the code of this procedure it actually runs the below statement where it holds an exclusive table lock (TABLOCKX) in a TRANSACTION which is more concerning.

BEGIN TRANSACTION SELECT @current_rows_per_job = COUNT(*) FROM msdb.dbo.sysjobhistory with (TABLOCKX) WHERE (job_id = @job_id) -- Delete the oldest history row(s) for the job being inserted if the new row has -- pushed us over the per-job row limit (MaxJobHistoryRows) SELECT @rows_to_delete = @current_rows_per_job - @max_rows_per_job IF (@rows_to_delete > 0) BEGIN WITH RowsToDelete AS ( SELECT TOP (@rows_to_delete) * FROM msdb.dbo.sysjobhistory WHERE (job_id = @job_id) ORDER BY instance_id ) DELETE FROM RowsToDelete; END -- Delete the oldest history row(s) if inserting the new row has pushed us over the -- global MaxJobHistoryTableRows limit. SELECT @current_rows = COUNT(*) FROM msdb.dbo.sysjobhistory SELECT @rows_to_delete = @current_rows - @max_total_rows IF (@rows_to_delete > 0) BEGIN WITH RowsToDelete AS ( SELECT TOP (@rows_to_delete) * FROM msdb.dbo.sysjobhistory ORDER BY instance_id ) DELETE FROM RowsToDelete; END IF (@@trancount > 0) COMMIT TRANSACTION

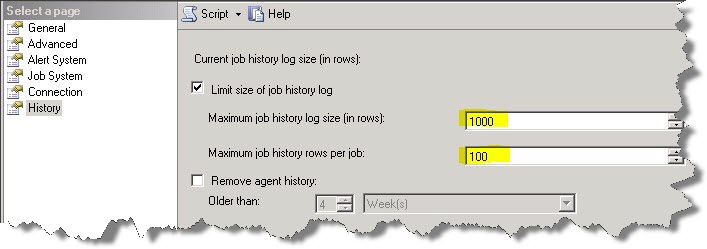

So if we observe there are two parameters which are needed in above code.

- @max_rows_per_job

- @max_total_rows

Both these values are actually what we set on the agent properties -> history tab.

You can also check these values from registry from below keys. Actually this is where the procedure reads the values from.

Now we understand what is actually happening in the back ground when the job is performing completion action.

But our question is still not answered. Why are we observing huge blocking only now if this is default behavior? So let’s go back to the table on which this is happening, sysjobhistory. By default there will be two indexes on this table.

Now we understand what is actually happening in the back ground when the job is performing completion action.

But our question is still not answered. Why are we observing huge blocking only now if this is default behavior? So let’s go back to the table on which this is happening, sysjobhistory. By default there will be two indexes on this table.

When we checked the index fragmentation on this table the non-clustered index nc1 was 98% fragmented. The solution is simple. We have to do an ONLINE REBUILD of the index. But how did we got there? On further analysis we came to the root cause. This SQL Server Agent was not set with any history limits initially when the system was setup. So the sysjobhistory had piled up and when we changed the row limit settings per job and total limit, it started cleaning old data and led to the fragmentation. SO before I end this blog post lets summarize on few points that can help us avoid such problems.

- Always limit the rows per job and total rows for all jobs.

- Have a maintenance job to check and rebuild/reorganize indexes on the sysjobhistory table.

Happy Learning 🙂

Regards

Manohar Punna

Like us on FaceBook | Join the fastest growing SQL Server group on FaceBook

Follow me on Twitter | Follow me on FaceBook