Azure Data factory is a cloud based Data Integration Service that Orchestrates and automates the Movement and transformation of data.

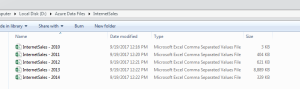

Step 1: I will place the multiple .csv files in the local drive in the “D:\Azure Data Files\InternetSales” as shown in the below screen shot

Step 2: I will create an Azure Data Lake store in the Azure as show in the below screen shot

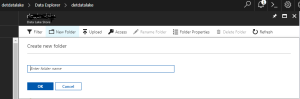

Click on the Data Explorer, it will take you to new window where you can create a new folder in the Azure Data Lake Store.

By clicking on the New Folder, it ask you to enter name of the folder i.e..; “InternetSales”. Click OK.

Step 3: Azure Data Lake Store uses Azure Active Directory for authentication. Before authoring an application that works with Azure Data Lake Store or Azure Data Lake Analytics, you must decide how to authenticate your application with Azure Active Directory (Azure AD). The two main options available are:

- End-user authentication

- Service-to-service authentication

Create and configure an Azure AD web application for service-to-service authentication with Azure Data Lake Store using Azure Active Directory. Here, we will see how to create Azure AD App.

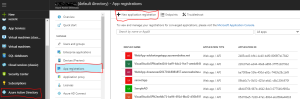

Click on the Azure Active Directory, it will open a new blade

Click on the “New application registration”

Click on create button.

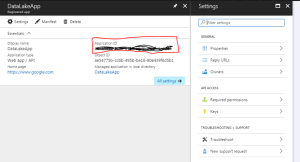

Click on “DataLakeApp” which is highlighted in Red Color.

Application ID is used as Service Principal ID in the Azure Data Lake Store Linked Service.

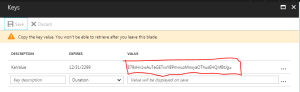

Click on “Keys”, this will allow to generate the Service Principal Key as shown in the below screen shot

Note: Application ID and Keys are used as Service Principal Id and Service Principal Key

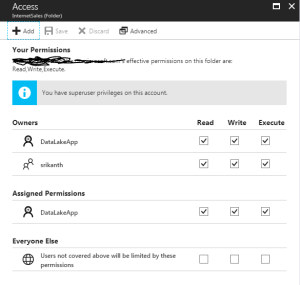

Steps 4: Authenticating “InternetSales” folder in the Azure Data Lake Store using Azure Active Directory.

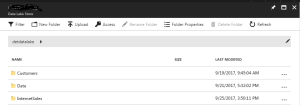

In the Azure Data Lake Store, Click on Data Explorer. It will show all the folders created in the Azure Data Lake Store.

Click on the InternetSales folder,

Click on “Access” Button

Click on the Select User or Group and search the Web App created in the Azure Active Directory and set the permissions as show below

Click OK.

Then you will see the permissions on the particular folder in Azure Data Lake Store

Step 5: Download and Install Data Management Gateway on machine, where the files have to be copied into Azure Data Lake Store.

Step 6: Using Azure Data Factory, let us create

- A Linked Service for Azure Data Lake Store

- A Linked Service for On-Premise File System

- A Dataset for Azure Data Lake Store

- A Dataset for On-Premise File System

- A Pipeline to group Dataset of Azure Data Lake Store and On-Premise File System and Schedule at the regular intervals

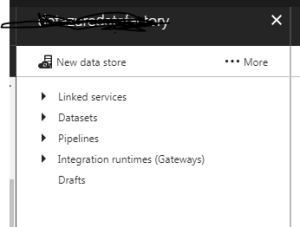

Click on Author and deploy to create Linked Services, Data Sets and Pipelines.

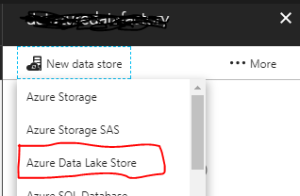

Select Linked services, click on New data store à Azure Data Lake Store

Click on Azure Data Lake Store.

{

"name": "AzureDataLakeStoreLinkedService",

"properties": {

"description": "",

"hubName": "detazuredatafactory_hub",

"type": "AzureDataLakeStore",

"typeProperties": {

"dataLakeStoreUri": "adl://<AzureDataLakeStoreName>.azuredatalakestore.net",

"servicePrincipalId": "XXXX",

"servicePrincipalKey": "XXX"

}

}

}

servicePrincipalId and servicePrincipalKey are the Application ID and KeyValues generated for the Web App Registration in Azure Active Directory.

Select Linked services, click on New data store à File System

{

"name": "InternetSalesOnPremisesFileServerLinkedService",

"properties": {

"description": "",

"type": "OnPremisesFileServer",

"typeProperties": {

"host": "D:\\Azure Data Files\\InternetSales",

"gatewayName": "XXXX",

"userId": "Domain\\UserID",

"password": "Password"

}

}

}

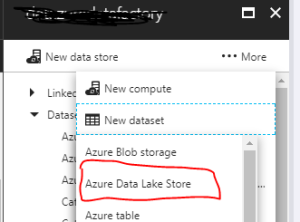

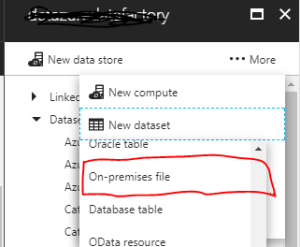

Click on “More”, Select New Dataset à Azure Data Lake Store

{

"name": "AzureDataLakeStoreDatasetTemplate",

"properties": {

"structure": [

{

"name": "DateKey",

"type": "Int64"

},

{

"name": "FullDateAlternateKey",

"type": "Datetime"

},

{

"name": "DayNumberOfWeek",

"type": "Int32"

},

{

"name": "EnglishDayNameOfWeek",

"type": "String"

},

{

"name": "SpanishDayNameOfWeek",

"type": "String"

},

{

"name": "FrenchDayNameOfWeek",

"type": "String"

},

{

"name": "DayNumberOfMonth",

"type": "Int32"

},

{

"name": "DayNumberOfYear",

"type": "Int32"

},

{

"name": "WeekNumberOfYear",

"type": "Int32"

},

{

"name": "EnglishMonthName",

"type": "String"

},

{

"name": "SpanishMonthName",

"type": "String"

},

{

"name": "FrenchMonthName",

"type": "String"

},

{

"name": "MonthNumberOfYear",

"type": "Int32"

},

{

"name": "CalendarQuarter",

"type": "Int32"

},

{

"name": "CalendarYear",

"type": "Int32"

},

{

"name": "CalendarSemester",

"type": "Int32"

},

{

"name": "FiscalQuarter",

"type": "Int32"

},

{

"name": "FiscalYear",

"type": "Int32"

},

{

"name": "FiscalSemester",

"type": "Int32"

}

],

"published": false,

"type": "AzureDataLakeStore",

"linkedServiceName": "AzureDataLakeStoreLinkedService",

"typeProperties": {

"fileName": "",

"folderPath": "Date/{Year}/{Month}/{Day}",

"format": {

"type": "TextFormat",

"rowDelimiter": "\n",

"columnDelimiter": ",",

"firstRowAsHeader": true

},

"partitionedBy": [

{

"name": "Year",

"value": {

"type": "DateTime",

"date": "SliceStart",

"format": "yyyy"

}

},

{

"name": "Month",

"value": {

"type": "DateTime",

"date": "SliceStart",

"format": "MM"

}

},

{

"name": "Day",

"value": {

"type": "DateTime",

"date": "SliceStart",

"format": "dd"

}

}

]

},

"availability": {

"frequency": "Minute",

"interval": 15

},

"external": false,

"policy": {}

}

}

On-Premise File Dataset

{

"name": "OnPremisesFile",

"properties": {

"structure": [

{

"name": "ProductKey",

"type": "Int32"

},

{

"name": "OrderDateKey",

"type": "Int64"

},

{

"name": "DueDateKey",

"type": "Int64"

},

{

"name": "ShipDateKey",

"type": "Int64"

},

{

"name": "CustomerKey",

"type": "Int32"

},

{

"name": "PromotionKey",

"type": "Int32"

},

{

"name": "CurrencyKey",

"type": "Int32"

},

{

"name": "SalesTerritoryKey",

"type": "Int32"

},

{

"name": "SalesOrderNumber",

"type": "String"

},

{

"name": "SalesOrderLineNumber",

"type": "Int32"

},

{

"name": "RevisionNumber",

"type": "Int32"

},

{

"name": "OrderQuantity",

"type": "Int32"

},

{

"name": "UnitPrice",

"type": "Decimal"

},

{

"name": "ExtendedAmount",

"type": "Decimal"

},

{

"name": "UnitPriceDiscountPct",

"type": "Int32"

},

{

"name": "DiscountAmount",

"type": "Int32"

},

{

"name": "ProductStandardCost",

"type": "Decimal"

},

{

"name": "TotalProductCost",

"type": "Decimal"

},

{

"name": "SalesAmount",

"type": "Decimal"

},

{

"name": "TaxAmt",

"type": "Decimal"

},

{

"name": "Freight",

"type": "Decimal"

},

{

"name": "CarrierTrackingNumber",

"type": "String"

},

{

"name": "CustomerPONumber",

"type": "String"

},

{

"name": "OrderDate",

"type": "Datetime"

},

{

"name": "DueDate",

"type": "Datetime"

},

{

"name": "ShipDate",

"type": "Datetime"

}

],

"published": false,

"type": "FileShare",

"linkedServiceName": "InternetSalesOnPremisesFileServerLinkedService",

"typeProperties": {

"folderPath": "D:\\Azure Data Files\\InternetSales",

"format": {

"type": "TextFormat",

"rowDelimiter": "\n",

"columnDelimiter": "\t",

"firstRowAsHeader": true

}

},

"availability": {

"frequency": "Minute",

"interval": 15

},

"external": true,

"policy": {}

}

}

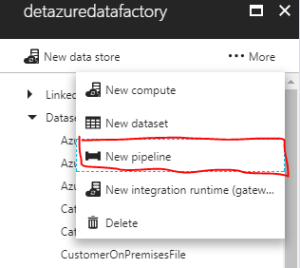

Click on … More to create “New pipeline”

{

"name": "OnpremiseToAzureDataLakeStorePipeline",

"properties": {

"description": "Copy data from a onpremise to Azure Data Lake Store",

"activities": [

{

"type": "Copy",

"typeProperties": {

"source": {

"type": "FileSystemSource",

"recursive": true

},

"sink": {

"type": "AzureDataLakeStoreSink",

"copyBehavior": "PreserveHierarchy",

"writeBatchSize": 0,

"writeBatchTimeout": "00:00:00"

}

},

"inputs": [

{

"name": "OnPremisesFileDataset"

}

],

"outputs": [

{

"name": "AzureDataLakeStoreDatasetTemplate"

}

],

"scheduler": {

"frequency": "Minute",

"interval": 15

},

"name": "OnpremiseToAzureDataLakeStoreActivity"

}

],

"start": "2017-09-20T00:10:00Z",

"end": "2017-09-30T00:10:00Z",

"isPaused": false,

"hubName": "detazuredatafactory_hub",

"pipelineMode": "Scheduled"

}

}

Hi,

I am looking for the same solution as you explain above.

It seems that GUI of Azure data factory has been changed now.

I have an Azure subscription, but the problem is that I can not use that out of my office laptop.

Is there any way to connect from anywhere.